An AI SDK With Push-Button Flow From Model to Inference

Import models from standard frameworks such as TensorFlow, PyTorch, and ONNX with the push of a button using the imAIgine® Software Development Kit (SDK). The SDK includes a Model Garden and a custom kernel development flow for high-performance computing applications.

Tight Integration With TensorFlow and PyTorch

The imAIgine® Software Development Kit (SDK) enables a push-button flow from a trained deep learning model to a performant inference implementation on tsunAImi® accelerator cards and runAI® family devices. It does this by integrating tightly with both TensorFlow and PyTorch, so that custom neural networks can be quantized and optimized for inference. A powerful, flexible, and easy-to-integrate client API then gets applications up and running quickly.

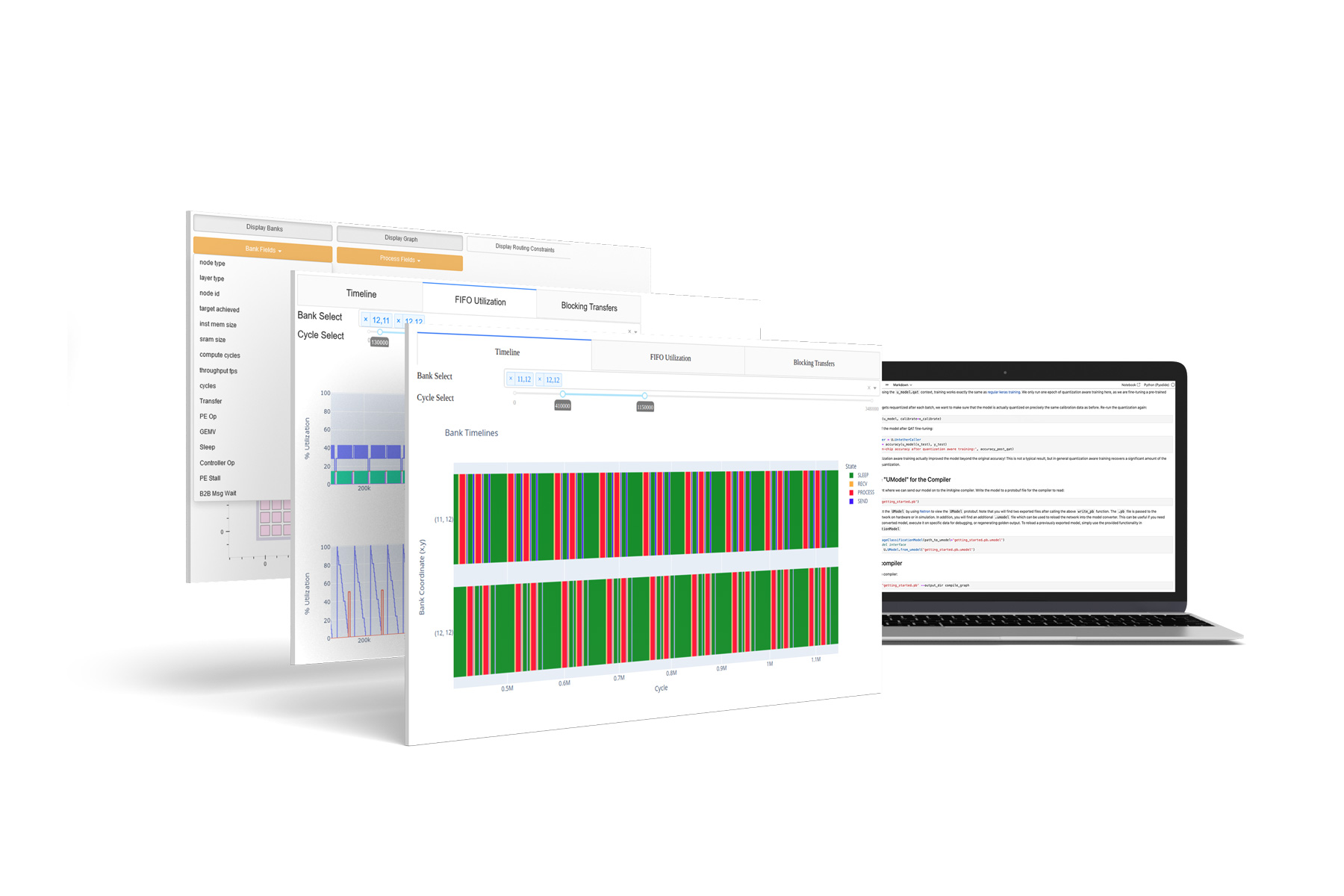

Automated Tools From Pilot to Production

The imAIgine SDK gives developers powerful automated tools and supporting software to quickly go from pilot model to production.

The imAIgine Compiler

- Import TensorFlow or PyTorch graphs directly

- Quantize models and extract performance without sacrificing accuracy

- Specify performance levels, silicon utilization, and power consumption targets
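The sketch below shows what "quantizing" a network means in general. It is a generic symmetric per-tensor int8 scheme, not the imAIgine Compiler's quantizer, whose algorithm is not described here. The largest absolute weight maps to 127, each value is rounded to the nearest integer step (Python's `round` breaks ties to even), and the values are dequantized to measure the rounding error. The weights and function names are illustrative assumptions.

```python
def int8_scale(values):
    """Symmetric per-tensor scale: the largest |x| maps to 127."""
    max_abs = max((abs(v) for v in values), default=0.0)
    return max_abs / 127.0 if max_abs > 0 else 1.0


def quantize_int8(values, scale):
    """q = clamp(round(x / scale), -127, 127); round() uses round-half-to-even."""
    return [max(-127, min(127, round(v / scale))) for v in values]


def dequantize_int8(q_values, scale):
    """Map int8 codes back to floats so the rounding error can be measured."""
    return [q * scale for q in q_values]


# Hypothetical example weights, not taken from any real model.
weights = [0.50, -1.27, 0.031, 1.00, -0.004]
scale = int8_scale(weights)
q = quantize_int8(weights, scale)
restored = dequantize_int8(q, scale)
max_err = max(abs(w - r) for w, r in zip(weights, restored))
print(q, scale, max_err)
```

Because the scale is chosen so that no value falls outside the int8 range, each element's error is bounded by half a quantization step (`scale / 2`). Production quantizers typically add refinements such as per-channel scales or calibration data to keep accuracy loss small.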

The imAIgine Toolkit

- Evaluate functionality and performance using the extensive profiling and simulation tools

The imAIgine Runtime

- Provides a C-based API for integration into your deep-learning-based application

- Monitors the health and temperature of tsunAImi® accelerator cards to ensure proper operation and prevent thermal damage
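Application-side health monitoring often takes the shape sketched below: poll a temperature source, classify each reading, and react when a limit is crossed. This is a hypothetical illustration only. `read_temp_c` stands in for whatever temperature query the imAIgine Runtime's C API actually exposes. The 85 °C and 95 °C thresholds, along with `classify`, `watch`, and `ThermalState`, are invented for the example and are not vendor specifications.

```python
from enum import Enum
from typing import Callable, List


class ThermalState(Enum):
    OK = "ok"
    WARN = "warn"
    CRITICAL = "critical"


# Hypothetical thresholds in degrees Celsius; real limits come from the card's documentation.
WARN_C = 85.0
CRITICAL_C = 95.0


def classify(temp_c: float) -> ThermalState:
    """Map a single temperature reading to a coarse health state."""
    if temp_c >= CRITICAL_C:
        return ThermalState.CRITICAL
    if temp_c >= WARN_C:
        return ThermalState.WARN
    return ThermalState.OK


def watch(read_temp_c: Callable[[], float], samples: int,
          on_critical: Callable[[float], None]) -> List[ThermalState]:
    """Poll `read_temp_c` (a hypothetical stand-in for the runtime's temperature
    query) `samples` times and call `on_critical` for every critical reading."""
    states = []
    for _ in range(samples):
        temp = read_temp_c()
        state = classify(temp)
        states.append(state)
        if state is ThermalState.CRITICAL:
            on_critical(temp)  # e.g. pause inference or shed load
    return states


# Usage with a simulated sensor instead of real hardware.
readings = iter([70.0, 86.5, 96.0, 80.0])
alerts = []
states = watch(lambda: next(readings), samples=4, on_critical=alerts.append)
print([s.value for s in states], alerts)
```

A real monitor would also sleep between polls and would likely use hysteresis, so that a reading hovering around a threshold does not toggle the state on every sample.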
