Lightning Fast Inference For Everyday Use

OEMs and on-premise data centers can use our AI inference ICs and AI accelerator cards to deploy artificial intelligence just about anywhere. Our proven at-memory architecture provides the highest throughput along with industry-leading power efficiency and cost efficiency.

AI Inference Accelerator Cards

Our AI accelerator cards deliver the superior AI inference performance of our ICs in a PCI-Express form factor and power envelope. Our accelerator cards provide unparalleled performance for the most demanding inference tasks with industry-leading energy efficiency.

speedAI240 Slim Accelerator Card

Introducing the speedAI®240 Slim AI accelerator card, featuring the next-generation speedAI240 IC for superior performance and enhanced accuracy in CNNs, attention networks, and recommendation systems. Its low-profile, 75-watt TDP PCIe design sets the standard as the most efficient edge computing solution, delivering optimal performance and reduced power consumption for various end applications.

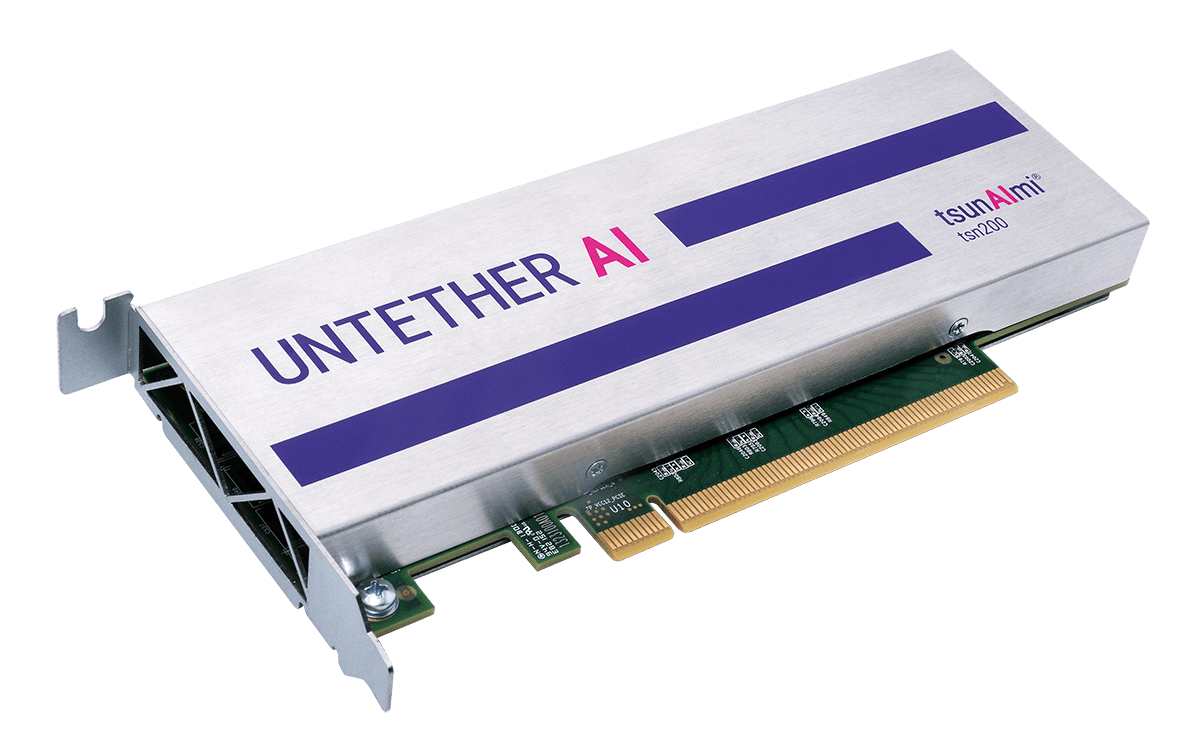

tsunAImi tsn200 Accelerator Card

The heart of the tsunAImi® tsn200 AI accelerator card is a runAI200 device. The card is designed for high-performance, power-sensitive edge applications such as video analytics. The card delivers the same superior performance as the runAI200 IC, but in a low-profile, 75-watt TDP PCIe card. With typical application power of 40W, the tsunAImi tsn200 is the most efficient edge computing card in its class.

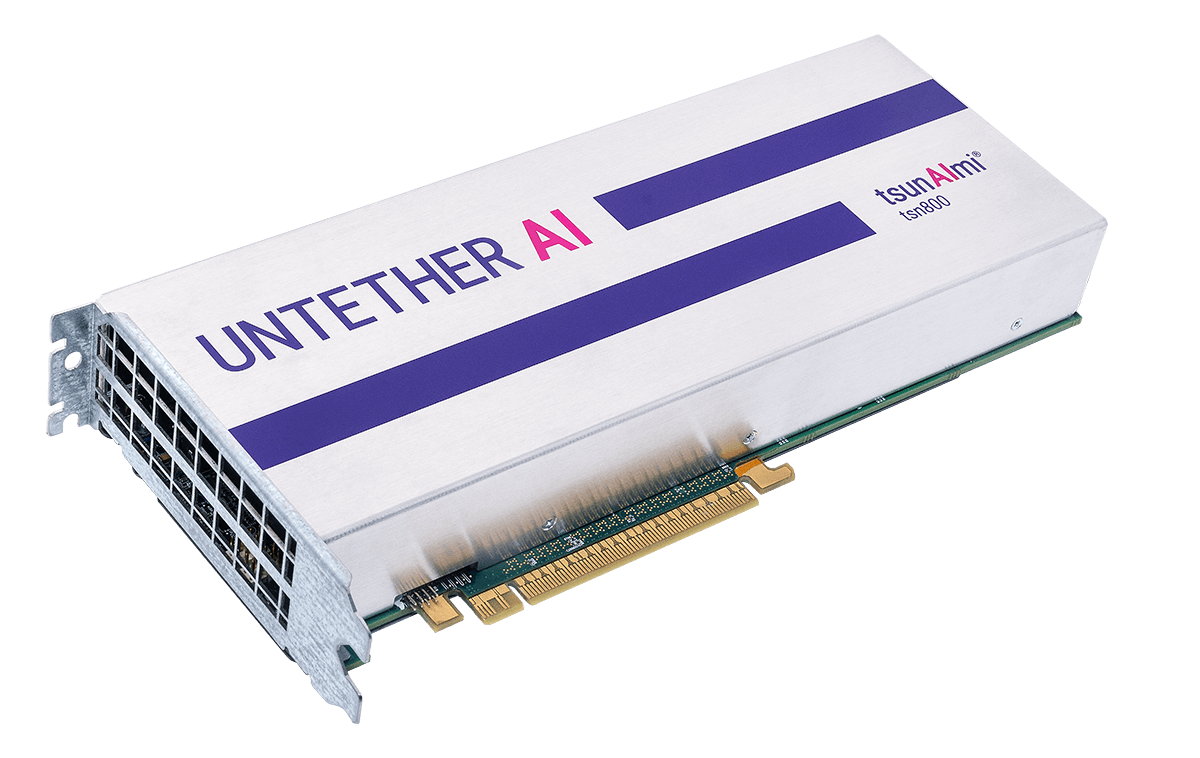

tsunAImi tsn800 Accelerator Card

Powered by four runAI200 devices, the tsunAImi® tsn800 AI accelerator card is built for high-performance servers and data centers hosting applications such as vision-based CNNs, transformer networks for natural language processing, and time-series analysis for financial applications. The extraordinarily efficient 300-watt TDP PCIe card delivers an industry-best compute density of over 2 PetaOps of INT8 performance.

AI Inference Accelerator ICs

Our distinctive at-memory IC architecture puts compute elements directly adjacent to memory cells. This leads to ICs with unrivalled compute density and minimal power consumption. It also results in tremendous operational cost-efficiency, making it practical to apply AI inference to a wide range of uses, including vision AI, autonomous vehicles, AgTech, financial technology, and government sector applications.

The runAI200 Accelerator

The runAI®200, our first-generation IC, is designed for real-time deep learning, appropriate for vision-based convolutional networks, transformer networks for natural language processing, time-series financial analysis, and general-purpose linear algebra for high-performance computing (HPC), and other applications. With 511 custom RISC processors and 204 MB of SRAM, it delivers up to 502 TOPS and up to 8 TOPS per watt.

The speedAI240 Accelerator

The speedAI®240, our second-generation IC now in manufacturing, is similarly suitable for AI inference workloads of all types, but it provides even greater performance and is fine-tuned for greater accuracy, useful in CNNs, attention networks, and recommendation systems. With 1,400 custom RISC-V processors in our unique at-memory architecture, these devices deliver up to 2 PetaFlops of inference performance and up to 20 TeraFlops per watt.